
Tuesday, April 13, 2010

Data allocation in B-tree

You can get the index depth by querying the sys.dm_db_index_physical_stats dynamic management function; its index_depth column reports the number of levels.

In general, the only things that determine the index depth are the total number of rows (more precisely, the number of leaf-level pages they occupy) and the size of the index key (more specifically, how many keys will fit in the 8,096 bytes available on a page).

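With 10K rows in the table, there will be 10K rows in the leaf level (assuming this is not a filtered index). The maximum width of an index key is 900 bytes. Taking this as the worst-case scenario, each non-leaf page can hold at most 8 index keys (8,096 / 900, rounded down). To keep the arithmetic simple, the calculation also gives every leaf row its own entry in level 1 (as if each leaf row filled a whole page), and each division is rounded up: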

Leaf: 10K rows

Level 1: 10,000 / 8 = 1,250 pages

Level 2: 1,250 / 8 = 157 pages

Level 3: 157 / 8 = 20 pages

Level 4: 20 / 8 = 3 pages

Level 5: 3 / 8 = 1 page (root)

So, the index would have 6 levels, including the leaf.

If the key size were just 16 bytes, we could fit 506 index keys on a page:

Leaf: 10K rows

Level 1: 10,000 / 506 = 20 pages

Level 2: 20 / 506 = 1 page (root)

So in this case, there would be only 2 non-leaf levels.
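The level-by-level arithmetic above can be reproduced with a short Python sketch. It makes the same simplifying assumptions as the text (one level-1 entry per leaf row, no per-row overhead, 8,096 usable bytes per page) and repeats a rounded-up division until a single root page remains. The function names are illustrative, not part of any SQL Server API.

```python
PAGE_BYTES = 8096  # bytes available for rows on an 8 KB SQL Server page


def keys_per_page(key_bytes: int) -> int:
    """Fan-out of a non-leaf page, ignoring per-row overhead as the text does."""
    return PAGE_BYTES // key_bytes


def non_leaf_levels(leaf_entries: int, fan_out: int) -> list:
    """Page counts of the non-leaf levels, from level 1 up to the root."""
    if leaf_entries < 1:
        raise ValueError("need at least one leaf entry")
    if fan_out < 2:
        raise ValueError("a non-leaf page must hold at least 2 keys")
    levels = []
    entries = leaf_entries
    while True:
        pages = -(-entries // fan_out)  # ceiling division: a partly full page still counts
        levels.append(pages)
        if pages == 1:                  # a single page is the root
            return levels
        entries = pages                 # each page needs one entry in the level above


for key_bytes in (900, 16):
    levels = non_leaf_levels(10_000, keys_per_page(key_bytes))
    print(key_bytes, levels, "levels including the leaf:", len(levels) + 1)
# 900 [1250, 157, 20, 3, 1] levels including the leaf: 6
# 16 [20, 1] levels including the leaf: 3
```

The total depth is the number of non-leaf levels plus one for the leaf, which matches the 6 levels for 900-byte keys and the 2 non-leaf levels for 16-byte keys worked out above.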

For more details on B+ trees, the structure SQL Server uses for its indexes, see http://en.wikipedia.org/wiki/B%2B_tree

Monday, April 12, 2010

ASP.NET 2.0 Page Directives

Here’s an example of the @Page directive:

<%@ Page Language="C#" AutoEventWireup="true" CodeFile="Sample.aspx.cs" Inherits="Sample" Title="Sample Page Title" %>

There are 11 page directives in ASP.NET 2.0. Some are essential: without them we cannot develop any web application in ASP.NET. Others are used only occasionally, as needed. Directives can be located anywhere in an .aspx or .ascx file, though standard practice is to include them at the beginning of the file. Each directive can contain one or more attributes (paired with values) that are specific to that directive.

The ASP.NET Web Forms page framework supports the following directives:

1. @Page

2. @Master

3. @Control

4. @Register

5. @Reference

6. @PreviousPageType

7. @OutputCache

8. @Import

9. @Implements

10. @Assembly

11. @MasterType

@Page Directive

The @Page directive enables you to specify attributes and values for an ASP.NET page to be used when the page is parsed and compiled. Every .aspx file should include the @Page directive. It has many attributes; we shall discuss some of the important ones here.

a. AspCompat: When set to true, this allows the page to be executed on a single-threaded apartment (STA) thread. If you want to use a component developed in VB 6.0, you can set this value to true. However, setting this attribute to true can degrade your page's performance.

b. Language: This attribute tells the compiler about the language being used in the code-behind. Values can represent any .NET-supported language, including Visual Basic, C#, or JScript .NET.

c. AutoEventWireup: Indicates whether the page's events are automatically bound to handler methods with standard names (such as Page_Load) in the same .aspx file or in the code-behind. The default value is true.

d. CodeFile: Specifies the code-behind file with which the page is associated.

e. Title: Sets the page title, overriding the title specified in the master page.

f. Culture: Specifies the culture setting of the page. Setting it to auto enables the page to detect the required culture automatically.

g. UICulture: Specifies the UI culture setting to use for the page. Supports any valid UI culture value.

h. ValidateRequest: Indicates whether request validation should occur. If set to true, request validation checks all input data against a hard-coded list of potentially dangerous values. If a match occurs, an HttpRequestValidationException is thrown. The default is true. This feature is enabled in the machine configuration file (Machine.config). You can disable it in your application configuration file (Web.config) or on the page by setting this attribute to false.

i. Theme: Specifies the theme for the page. Themes are a new feature in ASP.NET 2.0.

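j. SmartNavigation: Indicates whether smart navigation is enabled for the page. When set to true, the browser returns to the same position on the page after a postback. The default value is false. In ASP.NET 2.0 this attribute is obsolete; use the MaintainScrollPositionOnPostback attribute instead.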

k. MasterPageFile: Specifies the location of the master page file to be used with the current ASP.NET page.

l. EnableViewState: Indicates whether view state is maintained across page requests. true if view state is maintained; otherwise, false. The default is true.

m. ErrorPage: Specifies a target URL for redirection if an unhandled page exception occurs.

n. Inherits: Specifies a code-behind class for the page to inherit. This can be any class derived from the Page class.

There are also other, seldom used attributes, such as Buffer, CodePage, ClassName, EnableSessionState, Debug, Description, EnableTheming, EnableViewStateMac, TraceMode, and WarningLevel. Here is an example of an @Page directive:

<%@ Page Language="C#" AutoEventWireup="true" CodeFile="Sample.aspx.cs" Inherits="Sample" Title="Sample Page Title" %>

@Master Directive

The @Master directive is quite similar to the @Page directive, but it belongs to master pages, that is, .master files. A master page can be used with any number of content pages, so the content pages can inherit the attributes of the master page. Even though the @Page and @Master directives are similar, the @Master directive has fewer attributes:

a. Language: This attribute tells the compiler about the language being used in the code-behind. Values can represent any .NET-supported language, including Visual Basic, C#, or JScript .NET.

b. AutoEventWireup: Indicates whether the master page's events are automatically bound to methods in the same .master file or in the code-behind. The default value is true.

c. CodeFile: Specifies the code-behind file with which the master page is associated.

d. Title: Set the MasterPage Title.

e. MasterPageFile: Specifies the location of the master page file to be used with the current master page. This is known as a nested master page.

f. EnableViewState: Indicates whether view state is maintained across page requests. true if view state is maintained; otherwise, false. The default is true.

g. Inherits: Specifies a code-behind class for the master page to inherit. This can be any class derived from the MasterPage class.

Here is an example of an @Master directive:

<%@ Master Language="C#" AutoEventWireup="true" CodeFile="WebMaster.master.cs" Inherits="WebMaster" %>

@Control Directive

The @Control directive is used when we build ASP.NET user controls. It defines the attributes to be inherited by the user control; these values are assigned to the user control as it is parsed and compiled. The attributes of the @Control directive are:

a. Language: This attribute tells the compiler about the language being used in the code-behind. Values can represent any .NET-supported language, including Visual Basic, C#, or JScript .NET.

b. AutoEventWireup: Indicates whether the user control's events are automatically bound to methods in the same .ascx file or in the code-behind. The default value is true.

c. CodeFile: Specifies the code-behind file with which the user control is associated.

d. EnableViewState: Indicates whether view state is maintained across page requests. true if view state is maintained; otherwise, false. The default is true.

e. Inherits: Specifies a code-behind class for the user control to inherit. This can be any class derived from the UserControl class.

f. Debug: Indicates whether the user control should be compiled with debug symbols.

g. Src: Points to the source file of the class used for the code behind of the user control.

Other, very rarely used attributes are ClassName, CompilerOptions, CompileWith, Description, EnableTheming, Explicit, LinePragmas, Strict, and WarningLevel.

Here is an example of an @Control directive:

<%@ Control Language="C#" AutoEventWireup="true" CodeFile="MyControl.ascx.cs" Inherits="MyControl" %>

@Register Directive

The @Register directive associates aliases with namespaces and class names for notation in custom server control syntax. When you drag and drop a user control onto an .aspx page, Visual Studio 2005 automatically creates an @Register directive at the top of the page. This registers the user control on the page so that the control can be used in the .aspx page by a specific tag name.

The main attributes of the @Register directive are:

a. Assembly: The assembly you are associating with the TagPrefix.

b. Namespace: The namespace to relate with the TagPrefix.

c. Src: The location of the user control.

d. TagName: The alias to relate to the class name.

e. TagPrefix: The alias to relate to the namespace.

Here is an example of an @Register directive:

<%@ Register Src="~/usercontrol/usercontrol1.ascx" TagName="Yourusercontrol" TagPrefix="uc1" %>

@Reference Directive

The @Reference directive declares that another ASP.NET page or user control should be compiled along with the current page or user control. The @Reference directive has three attributes:

a. Control: User control that ASP.NET should dynamically compile and link to the current page at run time.

b. Page: The Web Forms page that ASP.NET should dynamically compile and link to the current page at run time.

c. VirtualPath: Specifies the location of the page or user control from which the active page will be referenced.

Here is an example of an @Reference directive:

<%@ Reference VirtualPath="YourReferencePage.ascx" %>

@PreviousPageType Directive

The @PreviousPageType directive is new in ASP.NET 2.0. It supports cross-page posting between ASP.NET pages by specifying the page from which the cross-page post originates. This simple directive has only two attributes:

a. TypeName: Sets the name of the derived class from which the postback will occur.

b. VirtualPath: Sets the location of the posting page from which the postback will occur.

Here is an example of the @PreviousPageType directive:

<%@ PreviousPageType VirtualPath="~/YourPreviousPageName.aspx" %>

@OutputCache Directive

The @OutputCache directive controls the output caching policies of an ASP.NET page or user control. You can also set caching policies programmatically in code, using Visual Basic .NET or Visual C# .NET. The most important attributes of the @OutputCache directive are:

Duration: The duration of time in seconds that the page or user control is cached.

Location: Specifies where the output cache is stored. To store it on the browser client where the request originated, set the value to Client. To store it on any HTTP 1.1 cache-capable device, including proxy servers and the client that made the request, set Location to Downstream. To store it on the Web server, set Location to Server.

VaryByParam: A semicolon-separated list of parameter names used to vary the output cache. Use "none" for a single cached version regardless of parameters, or "*" to vary by all parameters.

VaryByControl: A semicolon-separated list of control IDs used to vary the output cache of a user control.

VaryByCustom: A string that specifies custom output caching requirements. The built-in value "browser" varies the cache by browser name and major version.

VaryByHeader: A semicolon-separated list of HTTP headers used to vary the output cache.

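Other, rarely used attributes include CacheProfile, DiskCacheable, NoStore, and SqlDependency. For example, the following directive caches the page's output on the Web server for 60 seconds, keeping a single version regardless of parameters: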

<%@ OutputCache Duration="60" Location="Server" VaryByParam="None" %>

To turn off output caching for an ASP.NET Web page at both the client and proxy locations, set the Location attribute to None and VaryByParam to None in the @OutputCache directive, as in the following code:

<%@ OutputCache Location="None" VaryByParam="None" %>
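To make Duration and VaryByParam concrete, here is a toy Python model of an output-cache lookup. It is a sketch under assumptions, not how ASP.NET implements caching: the class and its methods are hypothetical, parameter names are matched case-insensitively, and Location, headers, and dependencies are ignored. It only shows that the cache key is built from the parameters named in VaryByParam and that an entry is reused until Duration seconds have passed.

```python
import time
from typing import Callable, Dict, Mapping, Tuple


class ToyOutputCache:
    """Toy model of @OutputCache Duration and VaryByParam (an illustration, not ASP.NET)."""

    def __init__(self, duration: float, vary_by_param: str,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.duration = duration                    # seconds, like the Duration attribute
        spec = vary_by_param.strip().lower()
        self.vary_all = spec == "*"                 # "*": vary by every parameter
        # "none": one cached copy whatever the parameters; otherwise a ";"-separated list
        self.names = () if spec in ("none", "*") else tuple(
            sorted(n.strip() for n in spec.split(";") if n.strip()))
        self.clock = clock
        self.entries: Dict[Tuple, Tuple[float, str]] = {}

    def _key(self, path: str, params: Mapping[str, str]) -> Tuple:
        lowered = {k.lower(): v for k, v in params.items()}
        if self.vary_all:
            return (path.lower(), tuple(sorted(lowered.items())))
        # Parameters not listed in VaryByParam do not affect the key.
        return (path.lower(), tuple((n, lowered.get(n)) for n in self.names))

    def get(self, path: str, params: Mapping[str, str],
            render: Callable[[], str]) -> str:
        key = self._key(path, params)
        now = self.clock()
        cached = self.entries.get(key)
        if cached is not None and now < cached[0]:
            return cached[1]                        # fresh entry: skip rendering
        output = render()                           # miss or expired: execute the page
        self.entries[key] = (now + self.duration, output)
        return output
```

With Duration 60 and VaryByParam "id", requests for ?id=1 and ?id=1&sort=asc share one cached copy, ?id=2 gets its own, and both are rendered again once 60 seconds have passed.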

@Import Directive

The @Import directive imports a namespace into an ASP.NET page or user control, making all the classes and interfaces of that namespace available to it. For example:

<%@ Import Namespace="System.Data" %>

<%@ Import Namespace="System.Data.SqlClient" %>

@Implements Directive

The @Implements directive indicates that an ASP.NET page or user control implements a specified .NET Framework interface. Its only attribute, Interface, specifies the interface. When an ASP.NET page or user control implements an interface, it has direct access to all of the interface's events, methods, and properties. For example:

<%@ Implements Interface="System.Web.UI.IValidator" %>

@Assembly Directive

The @Assembly directive is used to make your ASP.NET page aware of external components. This directive supports two attributes:

a. Name: Enables you to specify the name of an assembly you want to attach to the page. Give the file name without the extension.

b. Src: Specifies the path to a source code file to compile dynamically and link to the page.

<%@ Assembly Name="YourAssemblyName" %>

@MasterType Directive

To access members of a specific master page from a content page, you can create a strongly typed reference to the master page by adding an @MasterType directive. This directive supports two attributes, TypeName and VirtualPath.

a. TypeName: Sets the name of the derived class from which to get strongly typed references or members.

b. VirtualPath: Sets the location of the master page from which the strongly typed references and members will be retrieved.

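If you have public properties defined in a master page that you'd like to access in a strongly typed manner, you can add the @MasterType directive to the content page, as shown next:

<%@ MasterType VirtualPath="MasterPage.master" %>

The content page's code can then use the typed Master property directly, for example to set a public HeaderText property that MasterPage.master is assumed to define:

this.Master.HeaderText = "Label updated using MasterType directive with VirtualPath attribute";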

Thursday, April 8, 2010

WCF Advantages over Web Services

Web services have no instance management: you cannot have a singleton web service or a sessionful web service. You can maintain state in web services, but we all know how painful that is.

WCF, while very similar to Web Services, also incorporates many of the features of Remoting and .NET Enterprise Services (COM+ Serviced Components).

It is flexible and extensible like Remoting, only more so.

It is interoperable like Web Services, only more so.

It is feature-rich like Enterprise Services, only more so.

1) WCF can maintain transactions, like COM+ does.

2) It can maintain state.

3) It can control concurrency.

4) It can be hosted in IIS, in WAS, in a self-hosted application, or in a Windows service.

5) It has AJAX integration and JSON (JavaScript Object Notation) support.

References:

http://www.code-magazine.com/article.aspx?quickid=0611051&page=1

Difference between web farm and web garden?

A web farm is a web site or application hosted on multiple servers, with requests distributed among them. The term is also used simply to mean a business that performs Web site hosting on multiple servers.

A multi-processor Web server is called a Web garden. I guess this would imply that the webGarden attribute of the processModel element in Machine.config needs to be set to "true"? The two webGarden scenarios are:

a. webGarden="false"

1. Would this mean that the ASP.NET worker process uses only one CPU?

2. Would the % Processor Time counter then give a more optimistic reading of the situation than is the case, because it averages the time over two processors when only one is being used?

b. webGarden="true"

1. This implies I have to use an out-of-process technique for session state management, such as the ASP.NET State Service, which I have heard is about 15% slower.

In summary, the reasoning above suggests that a dual-processor machine benefits ASP.NET only if webGarden is set to "true" and an out-of-process technique is used for session state. Note, however, that with webGarden="false" (the default), only one worker process runs, but Windows schedules its threads across all CPUs and the cpuMask attribute is ignored, so the answer to question a.1 is no and a multi-processor machine still benefits ASP.NET without a Web garden.

Debugging Stored Procedures in SQL Server 2005

Pre-requisites

1. Locate and run rdbgsetup.exe on the physical server where SQL Server 2005 is installed. It is found at C:\Program Files\Microsoft SQL Server\90\Shared\1033\rdbgsetup.exe, or a similar path under the SQL Server installation directory. The name rdbgsetup stands for 'RemoteDeBuGsetup' (note the capitalized letters): we are going to debug stored procedures in a database on a remote physical server, so either we sit at the server to debug them or we set things up so that we can debug the stored procedures remotely.

2. The user who debugs the stored procedure must be a member of SQL Server's sysadmin fixed server role.

As a DBA, I may need to grant this privilege to a user who needs to debug a stored procedure. Before doing so, I should be sure the user can be trusted not to mess up anything on the server. Assuming my users are trustworthy, I issue the following T-SQL command to add the login to the sysadmin fixed server role:

EXEC master..sp_addsrvrolemember @loginame = N'<YourLoginName: a SQL Server Authentication or Windows Authentication login>', @rolename = N'sysadmin'

You can also do this in the SQL Server Management Studio IDE, but I prefer using T-SQL commands.

Note: The N prefix marks the string literals passed to sp_addsrvrolemember as Unicode (nvarchar, short for national variable character) values. The procedure's parameters are of type sysname, which is equivalent to nvarchar(128).

Now, we are all set for debugging stored procedures.

Process to Debug a stored procedure

1. Open the Microsoft Visual Studio 2005 IDE.

2. Select Server Explorer option from the View Menu.

3. Right-click the Data Connections node and select 'Add Connection'. Fill in the details to connect to the intended SQL Server and database, using a login that is a member of the sysadmin fixed server role, and click Test Connection.

4. Expand the data connection just added, and navigate to the Stored Procedures node.

5. Expand the Stored Procedures node and select the intended SP to be debugged.

6. Right-click it and select Open to view the source code of the selected stored procedure.

7. Place a breakpoint on the line where debugging should start, as .NET developers usually do.

8. After performing the above steps, the screen should look like the following screenshot.

9. Right-click the stored procedure in Server Explorer and select 'Step Into Stored Procedure'.

10. Enter values for the stored procedure's parameters and click OK.

11. From here on, it is the usual .NET debugging experience: use Step Into, Step Over, and Step Out from the shortcut menu, or press F11, F10, and Shift+F11 respectively.

Wasn't that simple? It makes the life of database developers much more comfortable. Without this support in SQL Server 2005 and the Visual Studio 2005 IDE, debugging stored procedures, remotely or locally, would be a nightmare.

Happy development and focused debugging!

http://www.dotnetfunda.com/articles/article27.aspx

Wednesday, April 7, 2010

.Net Memory Management & Garbage Collection

The Microsoft .NET common language runtime requires that all resources be allocated from the managed heap. Objects are automatically freed when they are no longer needed by the application.

When a process is initialized, the runtime reserves a contiguous region of address space that initially has no storage allocated for it. This address space region is the managed heap. The heap also maintains a pointer. This pointer indicates where the next object is to be allocated within the heap. Initially, the pointer is set to the base address of the reserved address space region.
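Because of the next-object pointer, allocation is little more than an addition. The toy Python model below shows the idea under simplifying assumptions: a fixed-size reserved region, no object headers or alignment, and an exception where a real runtime would start a garbage collection. The class name is hypothetical.

```python
class ToyManagedHeap:
    """Bump-pointer allocation over a reserved address range (toy model)."""

    def __init__(self, base: int, size: int) -> None:
        self.base = base
        self.limit = base + size
        self.next_obj = base              # initially points at the base address

    def allocate(self, nbytes: int) -> int:
        """Return the address of a new object of nbytes bytes."""
        if nbytes <= 0:
            raise ValueError("object size must be positive")
        if self.next_obj + nbytes > self.limit:
            # The CLR would run a garbage collection here; the toy just gives up.
            raise MemoryError("reserved region exhausted")
        address = self.next_obj
        self.next_obj += nbytes           # the next object goes right after this one
        return address


heap = ToyManagedHeap(base=0x1000, size=64)
a = heap.allocate(16)   # 0x1000
b = heap.allocate(24)   # 0x1010, directly after a
```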

Garbage collection in the Microsoft .NET common language runtime environment completely absolves the developer from tracking memory usage and knowing when to free memory. However, you’ll want to understand how it works. So let’s do it.

Topics Covered

This article covers the following topics:

- Why memory matters

- .Net Memory and garbage collection

- Generational garbage collection

- Temporary Objects

- Large object heap & Fragmentation

- Finalization

- Memory problems

1) Why Memory Matters

Inefficient use of memory can impact:

- Performance

- Stability

- Scalability

- Other applications

Hidden problems in code can cause:

- Memory leaks

- Excessive memory usage

- Unnecessary performance overhead

2) .Net Memory and garbage collection

.NET manages memory automatically:

- Creates objects in memory blocks (heaps)

- Destroys objects that are no longer in use

It allocates objects onto one of two heaps:

- Small object heap (SOH): objects smaller than 85,000 bytes

- Large object heap (LOH): objects of 85,000 bytes or more

You allocate onto the heap whenever you use the “new” keyword to create an instance of a reference type (a class).

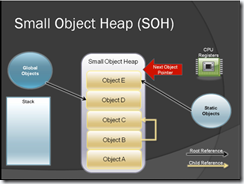

Small object heap (SOH)

- Allocation of objects smaller than 85,000 bytes

  - Contiguous heap: objects are allocated consecutively

- A next object pointer is maintained

  - Object references are held on the stack, in globals and statics, and in CPU registers

- Objects not in use are garbage collected

Figure-1

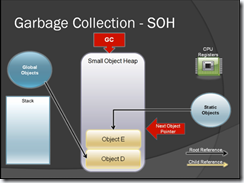

Next, how does the GC work in the SOH?

The GC collects objects according to the following rules:

- Reclaims memory from “rootless” objects

- Runs whenever memory usage reaches certain thresholds

- Identifies all objects still “in use”, that is, objects that:

  - Have a root reference, or

  - Have an ancestor with a root reference

- Compacts the heap:

  - Copies “rooted” objects over rootless ones

  - Resets the next object pointer

- Freezes all execution threads during GC

  - Every GC run hurts the performance of your app

Figure-2

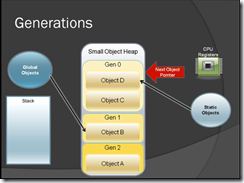

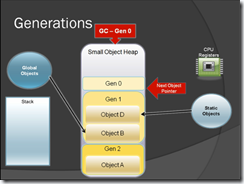
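The rules above (mark everything reachable from a root, then slide the survivors down and reset the next-object pointer) can be sketched in Python. This is a toy model under stated assumptions: objects are named records with a size and a list of outgoing references, the heap is a list in allocation order, and thread suspension is not modeled. All names are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set, Tuple


@dataclass
class Obj:
    name: str
    size: int
    refs: List[str] = field(default_factory=list)   # names of objects this one references


def mark(heap: List[Obj], roots: Set[str]) -> Set[str]:
    """Names of objects that have a root reference or an ancestor with one."""
    by_name: Dict[str, Obj] = {o.name: o for o in heap}
    live: Set[str] = set()
    stack = [r for r in roots if r in by_name]
    while stack:
        name = stack.pop()
        if name in live:
            continue
        live.add(name)
        stack.extend(r for r in by_name[name].refs if r in by_name)
    return live


def compact(heap: List[Obj], roots: Set[str],
            base: int = 0) -> Tuple[List[Obj], Dict[str, int], int]:
    """Copy rooted objects over rootless ones; return survivors, addresses, next pointer."""
    live = mark(heap, roots)
    survivors = [o for o in heap if o.name in live]  # allocation order is preserved
    addresses: Dict[str, int] = {}
    next_obj = base
    for o in survivors:
        addresses[o.name] = next_obj                 # survivor slides down to the lowest free address
        next_obj += o.size
    return survivors, addresses, next_obj            # next_obj is the reset next-object pointer
```

For a heap of A (16 bytes, referencing B), B (8), C (32), and D (8) with roots A and D, C is rootless, so its 32 bytes are reclaimed and D moves down to sit right after B.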

3) Generational garbage collection

Optimizing garbage collection:

- The newest objects usually die quickly

- The oldest objects tend to stay alive

- The GC groups objects into generations:

  - Short-lived: Gen 0

  - Medium: Gen 1

  - Long-lived: Gen 2

- When an object survives a GC, it is promoted to the next generation

- The GC compacts Gen 0 objects most often

- The more often the GC runs, the bigger the impact on performance

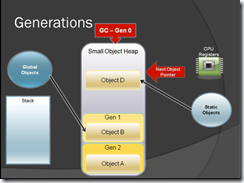

Figure-3

Figure-4

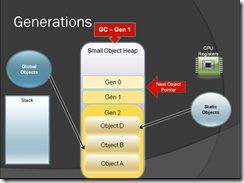

Here, object C is no longer referenced by anyone, so when the GC runs it is destroyed and object D is moved to Gen 1 (see Figure-5). Now Gen 0 has no objects, so the next time the GC runs, it will collect objects from Gen 1.

Figure-5

Figure-6

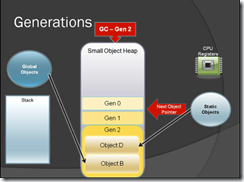

Here, when the GC runs, it moves objects D and B to Gen 2 because they are still referenced by global and static objects.

Figure-7

Here, when the GC collects Gen 2, it finds that object A is no longer referenced by anyone, so it destroys A and frees its memory. Now Gen 2 contains only objects D and B.

The garbage collector runs when (the sizes are approximate; the runtime tunes these budgets dynamically):

- Gen 0 objects reach ~256k

- Gen 1 objects reach ~2Meg

- Gen 2 objects reach ~10Meg

- System memory is low

Most objects should die in Gen 0.

A Gen 2 collection has a very high impact on performance because:

- Entire small object heap is compacted

- Large object heap is collected
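A promotion sequence like the one in the figures (C collected from Gen 0, survivors promoted to Gen 1 and then Gen 2, and A finally collected from Gen 2) can be replayed with a toy Python model. It is a sketch with simplifying assumptions, not the CLR's algorithm: reachability is computed over the whole heap from an explicit root set, whereas the real collector scans only the generations being collected and tracks references from older generations separately, and there are no size thresholds. The class and method names are hypothetical.

```python
from typing import Dict, Iterable, List, Set


class ToyGenerationalHeap:
    """Toy model of generational promotion (Gen 0 -> Gen 1 -> Gen 2)."""

    def __init__(self) -> None:
        self.gens: List[List[str]] = [[], [], []]   # Gen 0, Gen 1, Gen 2
        self.refs: Dict[str, List[str]] = {}        # outgoing references of each live object

    def allocate(self, name: str, refs: Iterable[str] = ()) -> None:
        self.refs[name] = list(refs)
        self.gens[0].append(name)                   # new objects always start in Gen 0

    def _reachable(self, roots: Iterable[str]) -> Set[str]:
        live: Set[str] = set()
        stack = list(roots)
        while stack:
            name = stack.pop()
            if name in live or name not in self.refs:
                continue
            live.add(name)
            stack.extend(self.refs[name])
        return live

    def collect(self, generation: int, roots: Iterable[str]) -> List[str]:
        """Collect Gen 0..generation; survivors move up one generation (Gen 2 stays put)."""
        live = self._reachable(roots)
        dead: List[str] = []
        for g in range(generation, -1, -1):         # oldest first, so promoted objects aren't rescanned
            survivors = [n for n in self.gens[g] if n in live]
            for n in self.gens[g]:
                if n not in live:
                    dead.append(n)
                    del self.refs[n]
            self.gens[g] = []
            self.gens[min(g + 1, 2)].extend(survivors)
        return dead


heap = ToyGenerationalHeap()
for name in "ABCD":
    heap.allocate(name)
print(heap.collect(0, roots={"A", "B", "D"}))   # ['C']; A, B, D move to Gen 1
print(heap.collect(1, roots={"A", "B", "D"}))   # []; A, B, D move to Gen 2
print(heap.collect(2, roots={"B", "D"}))        # ['A']; Gen 2 now holds B and D
```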

4) Temporary objects

- Once allocated, objects can’t be resized on a contiguous heap

- Objects such as strings are immutable

  - They can’t be changed; new versions are created instead

- The heap fills with temporary objects

Let us take an example to understand this scenario.

Figure – 8

After the GC runs, all the temporary objects are destroyed.

Figure–9

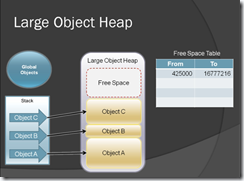
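Python strings are immutable too, so the effect can be shown outside .NET. In this sketch (an analogy, not .NET code; the helper names are hypothetical), building a string with repeated `+` leaves every intermediate value behind as garbage, and the number of characters copied grows quadratically; `str.join` builds the result once, much as `StringBuilder` does in .NET.

```python
from typing import List, Tuple


def concat_with_temporaries(parts: List[str]) -> Tuple[str, List[str]]:
    """Build a string with repeated '+' and record each intermediate value."""
    result = ""
    produced: List[str] = []
    for part in parts:
        result = result + part        # immutable: '+' creates a brand-new string
        produced.append(result)
    return result, produced[:-1]      # everything but the final value is now garbage


def chars_copied(parts: List[str]) -> int:
    """Characters copied by repeated '+': each step copies the whole string so far."""
    total = length = 0
    for part in parts:
        length += len(part)
        total += length
    return total


words = ["a", "b", "c", "d"]
result, temporaries = concat_with_temporaries(words)
print(result, temporaries)   # abcd ['a', 'ab', 'abc']
print(chars_copied(words))   # 10, versus 4 characters for "".join(words)
```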

5) Large object heap & Fragmentation

Large object heap (LOH)

- Allocation of objects of 85,000 bytes or more

- Non-contiguous heap

  - Objects are allocated using a free space table

- Garbage collected when the LOH threshold is reached

- Uses the free space table to find where to allocate

- Memory can become fragmented

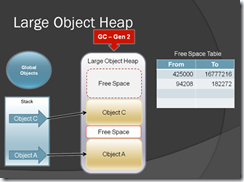

Figure-10

After object B is destroyed, the free space table records the address range it occupied as available.

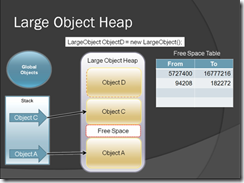

Figure-11

Now, when you create a new object, the runtime checks the free space table to find an area of the LOH that is free and large enough for the new object, and allocates the object where it fits.

Figure-12
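The free space table behavior can be sketched as a first-fit free list. This toy Python model is an illustration under assumptions, not the CLR's allocator: sizes are small abstract units rather than 85,000+ bytes, blocks are chosen first-fit, adjacent free blocks are merged, the heap is never compacted, and the class name is hypothetical. It shows how plenty of total free space can still fail to satisfy a request when no single gap is large enough.

```python
from typing import Dict, List, Optional, Tuple


class ToyLargeObjectHeap:
    """Non-compacting heap that allocates from a free space table (first fit)."""

    def __init__(self, size: int) -> None:
        self.free: List[Tuple[int, int]] = [(0, size)]    # (start, length), sorted by start
        self.live: Dict[str, Tuple[int, int]] = {}

    def allocate(self, name: str, length: int) -> Optional[int]:
        for i, (start, free_len) in enumerate(self.free):
            if free_len >= length:                         # first free block that fits
                self.live[name] = (start, length)
                if free_len == length:
                    del self.free[i]
                else:
                    self.free[i] = (start + length, free_len - length)
                return start
        return None                                        # no single gap is big enough

    def release(self, name: str) -> None:
        """Return an object's block to the free space table, merging neighbors."""
        self.free.append(self.live.pop(name))
        self.free.sort()
        merged: List[Tuple[int, int]] = []
        for start, length in self.free:
            if merged and merged[-1][0] + merged[-1][1] == start:
                merged[-1] = (merged[-1][0], merged[-1][1] + length)
            else:
                merged.append((start, length))
        self.free = merged


loh = ToyLargeObjectHeap(400)
for obj in ("A", "B", "C"):
    loh.allocate(obj, 100)            # A at 0, B at 100, C at 200
loh.release("B")                      # free table: [(100, 100), (300, 100)]
print(loh.allocate("D", 150))         # None: 200 units free, but fragmented
print(loh.allocate("E", 80))          # 100: fits in the gap left by B
```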

6) Object Finalization

- Disk, network, and UI resources need safe cleanup after use by .NET classes

- Object finalization guarantees that cleanup code will be called before collection

- Finalizable objects survive at least one extra GC and often make it to Gen 2

- Finalizable classes have a:

  - Finalize method (VB.NET)

  - C++-style destructor (C#)

The following guidelines help you decide when to use a Finalize method:

- Only implement Finalize on objects that require finalization. There are performance costs associated with Finalize methods.

- If you require a Finalize method, you should consider implementing IDisposable to allow users of your class to avoid the cost of invoking the Finalize method.

- Do not make the Finalize method more visible. It should be protected, not public.

- An object’s Finalize method should free any external resources that the object owns. Moreover, a Finalize method should release only resources that are held onto by the object. The Finalize method should not reference any other objects.

- Do not directly call a Finalize method on an object other than the object’s base class. This is not a valid operation in the C# programming language.

- Call the base.Finalize method from an object’s Finalize method.

Note: The base class’s Finalize method is called automatically with the C# and the Managed Extensions for C++ destructor syntax.

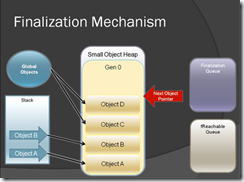

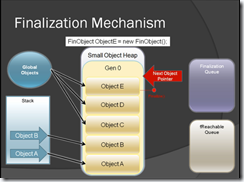

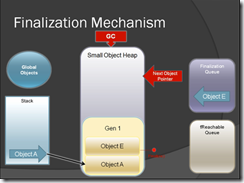

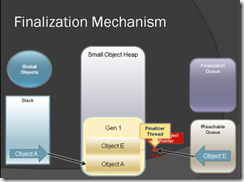

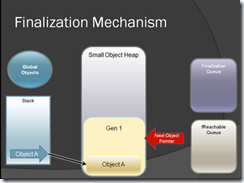

Let's look at an example to understand how finalization works.

Each figure explains what is going on, so you can clearly see how finalization works when the GC runs.

Figure-13

Figure-14

Figure-15

Figure-16

Figure-17
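The sequence in the figures can be modeled in Python with two queues, in the spirit of the CLR's finalization queue and f-reachable queue. This is a toy under stated assumptions: generations, threads, and timing are ignored, the finalizer "thread" is run explicitly, and all names are hypothetical. `suppress_finalize` stands in for what `GC.SuppressFinalize` achieves when called from a `Dispose` method. The model shows why a finalizable object survives one extra collection.

```python
from typing import Callable, Dict, List, Optional, Set, Tuple


class ToyFinalizingHeap:
    """Toy model of finalization: unreachable finalizable objects survive one extra GC."""

    def __init__(self) -> None:
        self.objects: Set[str] = set()
        self.finalization_queue: Dict[str, Callable[[], None]] = {}
        self.freachable: List[Tuple[str, Callable[[], None]]] = []  # awaiting Finalize

    def allocate(self, name: str, finalizer: Optional[Callable[[], None]] = None) -> None:
        self.objects.add(name)
        if finalizer is not None:
            self.finalization_queue[name] = finalizer      # registered when allocated

    def suppress_finalize(self, name: str) -> None:
        """What Dispose + GC.SuppressFinalize achieve: no finalizer, no extra GC."""
        self.finalization_queue.pop(name, None)

    def collect(self, roots: Set[str]) -> List[str]:
        """One GC: free unreachable objects, but move finalizable ones to f-reachable."""
        freed: List[str] = []
        pending = {name for name, _ in self.freachable}
        for name in sorted(self.objects):
            if name in roots or name in pending:
                continue                                   # reachable, or awaiting finalization
            if name in self.finalization_queue:
                # Move the entry to the f-reachable queue; the object survives this GC.
                self.freachable.append((name, self.finalization_queue.pop(name)))
            else:
                self.objects.discard(name)
                freed.append(name)
        return freed

    def run_finalizers(self) -> None:
        """Stand-in for the finalizer thread: call each pending Finalize once."""
        while self.freachable:
            _, finalizer = self.freachable.pop(0)
            finalizer()


log: List[str] = []
heap = ToyFinalizingHeap()
heap.allocate("A")                                     # no finalizer
heap.allocate("B", lambda: log.append("B finalized"))  # finalizable
print(heap.collect(roots=set()))                       # ['A']: B survives this GC
heap.run_finalizers()                                  # log == ['B finalized']
print(heap.collect(roots=set()))                       # ['B']: freed on the next GC
```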

For more information on finalization, refer to the following links:

http://www.object-arts.com/docs/index.html?howdofinalizationandmourningactuallywork_.htm

http://blogs.msdn.com/cbrumme/archive/2004/02/20/77460.aspx

How to minimize overheads

Object size, number of objects, and object lifetime are all factors that impact your application’s allocation profile. While allocations are quick, the efficiency of garbage collection depends (among other things) on the generation being collected. Collecting small objects from Gen 0 is the most efficient form of garbage collection because Gen 0 is the smallest and typically fits in the CPU cache. In contrast, frequent collection of objects from Gen 2 is expensive. To identify when allocations occur, and which generations they occur in, observe your application’s allocation patterns by using an allocation profiler such as the CLR Profiler.

You can minimize overheads with the following practices:

- Avoid Calling GC.Collect

- Consider Using Weak References with Cached Data

- Prevent the Promotion of Short-Lived Objects

- Set Unneeded Member Variables to Null Before Making Long-Running Calls

- Minimize Hidden Allocations

- Avoid or Minimize Complex Object Graphs

- Avoid Preallocating and Chunking Memory

Read more: http://www.guidanceshare.com/wiki/.NET_2.0_Performance_Guidelines_-_Garbage_Collection
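For the "Consider Using Weak References with Cached Data" guideline, .NET provides the WeakReference class. The same idea is sketched here with Python's standard weakref module, as an analogy rather than .NET code: the cache holds its values only weakly, so the garbage collector may reclaim an entry once no caller still uses it, and the cache then rebuilds it on demand. `Report` and `WeakCache` are hypothetical names.

```python
import gc
import weakref


class Report:
    """Hypothetical stand-in for an object that is expensive to build."""

    def __init__(self, key: str) -> None:
        self.key = key


class WeakCache:
    """Cache that does not keep its values alive on its own."""

    def __init__(self) -> None:
        self._items = weakref.WeakValueDictionary()   # values are held by weak references only
        self.builds = 0

    def get(self, key: str) -> Report:
        item = self._items.get(key)
        if item is None:                # never cached, or already reclaimed by the GC
            item = Report(key)
            self.builds += 1
            self._items[key] = item
        return item


cache = WeakCache()
report = cache.get("sales")
print(cache.get("sales") is report)     # True: served from the cache while still in use
del report
gc.collect()                            # the cached Report is now unreachable
cache.get("sales")                      # rebuilt on demand
print(cache.builds)                     # 2
```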

7) Common Memory Problems

- Excessive RAM footprint

  - The app allocates objects too early or keeps them for too long, using more memory than needed

  - Can affect other apps on the system

- Excessive temporary object allocation

  - Garbage collection runs more frequently

  - Executing threads freeze during garbage collection

- Memory leaks

  - Overlooked root references keep objects alive (collections, arrays, session state, delegates/events, etc.)

  - Incorrect or absent finalization can cause resource leaks


Hope this helps.

.Net Memory Management & Garbage Collection

Introduction

The Microsoft .NET common language runtime requires that all resources be allocated from the managed heap. Objects are automatically freed when they are no longer needed by the application.

When a process is initialized, the runtime reserves a contiguous region of address space that initially has no storage allocated for it. This address space region is the managed heap. The heap also maintains a pointer. This pointer indicates where the next object is to be allocated within the heap. Initially, the pointer is set to the base address of the reserved address space region.

Garbage collection in the Microsoft .NET common language runtime environment completely absolves the developer from tracking memory usage and knowing when to free memory. However, you’ll want to understand how it works. So let’s do it.

Topics Covered

We will cover the following topics in the article

- Why memory matters

- .Net Memory and garbage collection

- Generational garbage collection

- Temporary Objects

- Large object heap & Fragmentation

- Finalization

- Memory problems

1) Why Memory Matters

Insufficient use of memory can impact

- Performance

- Stability

- Scalability

- Other applications

Hidden problem in code can cause

- Memory leaks

- Excessive memory usage

- Unnecessary performance overhead

2) .Net Memory and garbage collection

.Net manages memory automatically

- Creates objects into memory blocks(heaps)

- Destroy objects no longer in use

Allocates objects onto one of two heaps

- Small object heap(SOH) – objects < 85k

- Large object heap(LOH) – objects >= 85k

You allocate onto the heap whenever you use the “new” keyword in code

Small object heap (SOH)

- Allocation of objects < 85k – Contiguous heap – Objects allocated consecutively

- Next object pointer is maintained – Objects references held on Stack, Globals, Statics and CPU register

- Objects not in use are garbage collected

Figure-1

Next, How GC works in SOH?

GC Collect the objects based on the following rules:

- Reclaims memory from “rootless” objects

- Runs whenever memory usage reaches certain thresholds

- Identifies all objects still “in use”

- Has root reference

- Has an ancestor with a root reference

- Compacts the heap

- Copies “rooted” objects over rootless ones

- Resets the next object pointer

- Freezes all execution threads during GC

- Every GC runs it hit the performance of your app

Figure-2

3) Generational garbage collection

Optimizing Garbage collection

- Newest object usually dies quickly

- Oldest object tend to stay alive

- GC groups objects into Generations

- Short lived – Gen 0

- Medium – Gen 1

- Long Lived – Gen 2

- When an object survives a GC it is promoted to the next generation

- GC compacts Gen 0 objects most often

- The more the GC runs the bigger the impact on performance

Figure-3

Figure-4

Here object C is no longer referenced by any one so when GC runs it get destroyed & Object D will be moved to the Gen 1 (see figure-5). Now Gen 0 has no object, so when next time when GC runs it will collect object from Gen 1.

Figure-5

Figure-6

Here when GC runs it will move the object D & B to Gen 2 because it has been referenced by Global objects & Static objects.

Figure-7

Here when GC runs for Gen 2 it will find out that object A is no longer referenced by anyone so it will destroy it & frees his memory. Now Gen 2 has only object D & B.

Garbage collector runs when

- Gen 0 objects reach ~256k

- Gen 1 objects reach ~2Meg

- Gen 2 objects reach ~10Meg

- System memory is low

Most objects should die in Gen 0.

Impact on performance is very high when Gen 2 run because

- Entire small object heap is compacted

- Large object heap is collected

4) Temporary objects

- Once allocated objects can’t resize on a contiguous heap

- Objects such as strings are Immutable

- Can’t be changed, new versions created instead

- Heap fills with temporary objects

Let us take example to understand this scenario.

Figure – 8

After the GC runs all the temporary objects are destroyed.

Figure–9

5) Large object heap & Fragmentation

Large object heap (LOH)

- Allocation of object >=85k

- Non contiguous heap

- Objects allocated using free space table

- Garbage collected when LOH Threshold Is reached

- Uses free space table to find where to allocate

- Memory can become fragmented

Figure-10

After object B is destroyed, the memory it occupied is recorded in the free space table as available.

Figure-11

Now when you create a new large object, the GC checks the free space table for a free area in the LOH that is big enough for it and allocates the object where it fits.

Figure-12

6) Object Finalization

- Disk, Network, UI resources need safe cleanup after use by .NET classes

- Object finalization guarantees cleanup code will be called before collection

- Finalizable objects survive at least 1 extra GC and often make it to Gen 2

- Finalizable classes have either a

- Finalize method (VB.NET)

- C++-style destructor (C#), which the compiler turns into a Finalize override

Here are the guidelines that help you to decide when to use Finalize method:

- Only implement Finalize on objects that require finalization. There are performance costs associated with Finalize methods.

- If you require a Finalize method, you should consider implementing IDisposable to allow users of your class to avoid the cost of invoking the Finalize method.

- Do not make the Finalize method more visible. It should be protected, not public.

- An object’s Finalize method should free any external resources that the object owns. Moreover, a Finalize method should release only resources that are held onto by the object. The Finalize method should not reference any other objects.

- Do not directly call a Finalize method on an object other than the object’s base class. This is not a valid operation in the C# programming language.

- Call the base.Finalize method from an object’s Finalize method.

Note: The base class’s Finalize method is called automatically with the C# and the Managed Extensions for C++ destructor syntax.
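The guidelines above are usually combined into the standard dispose pattern: a public `Dispose` that releases the resource and calls `GC.SuppressFinalize`, plus a C# destructor as a safety net. In this sketch the hypothetical `NativeBuffer` class owns a block of unmanaged memory obtained with `Marshal.AllocHGlobal`; treat it as an illustration of the pattern, not a production-ready wrapper.

```csharp
using System;
using System.Runtime.InteropServices;

// Hypothetical class that owns an unmanaged memory block.
public class NativeBuffer : IDisposable
{
    private IntPtr _handle;
    private bool _disposed;

    public NativeBuffer(int size)
    {
        _handle = Marshal.AllocHGlobal(size);
    }

    public bool IsDisposed => _disposed;

    // Callers release the resource deterministically, e.g. with a using block.
    public void Dispose()
    {
        Dispose(disposing: true);
        // Cleanup already happened, so the finalizer is not needed and the
        // object avoids the extra GC it would otherwise survive.
        GC.SuppressFinalize(this);
    }

    // disposing == true: called from Dispose(); managed objects may be used.
    // disposing == false: called from the finalizer; touch only unmanaged state.
    protected virtual void Dispose(bool disposing)
    {
        if (_disposed) return;

        if (_handle != IntPtr.Zero)
        {
            Marshal.FreeHGlobal(_handle);
            _handle = IntPtr.Zero;
        }
        _disposed = true;
    }

    // Destructor syntax compiles to a protected Finalize override that
    // calls the base class's Finalize automatically.
    ~NativeBuffer()
    {
        Dispose(disposing: false);
    }
}
```

Typical usage is `using (var buffer = new NativeBuffer(1024)) { ... }`, which calls `Dispose` at the end of the block so the finalizer never has to run.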

Let us see an example to understand how finalization works.

Each figure explains what is going on, so you can clearly see how finalization works when the GC runs.

Figure-13

Figure-14

Figure-15

Figure-16

Figure-17

For more information on Finalization refer the following links:

http://www.object-arts.com/docs/index.html?howdofinalizationandmourningactuallywork_.htm

http://blogs.msdn.com/cbrumme/archive/2004/02/20/77460.aspx

How to minimize overheads

Object size, number of objects, and object lifetime are all factors that impact your application’s allocation profile. While allocations are quick, the efficiency of garbage collection depends (among other things) on the generation being collected. Collecting small objects from Gen 0 is the most efficient form of garbage collection because Gen 0 is the smallest and typically fits in the CPU cache. In contrast, frequent collection of objects from Gen 2 is expensive. To identify when allocations occur, and which generations they occur in, observe your application’s allocation patterns by using an allocation profiler such as the CLR Profiler.

You can minimize overheads with these practices:

- Avoid Calling GC.Collect

- Consider Using Weak References with Cached Data

- Prevent the Promotion of Short-Lived Objects

- Set Unneeded Member Variables to Null Before Making Long-Running Calls

- Minimize Hidden Allocations

- Avoid or Minimize Complex Object Graphs

- Avoid Preallocating and Chunking Memory
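As an illustration of "Consider Using Weak References with Cached Data", the hypothetical `WeakCache` below holds its values only through `System.WeakReference<T>`, so the GC may reclaim them under memory pressure and a later lookup simply rebuilds the value. It is a sketch under those assumptions: it is not thread-safe and never prunes dead entries from the dictionary.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical cache that does not keep its values alive on its own.
public class WeakCache<TKey, TValue> where TValue : class
{
    private readonly Dictionary<TKey, WeakReference<TValue>> _entries =
        new Dictionary<TKey, WeakReference<TValue>>();
    private readonly Func<TKey, TValue> _factory;

    public WeakCache(Func<TKey, TValue> factory)
    {
        _factory = factory;
    }

    public TValue Get(TKey key)
    {
        // Reuse the cached value if the GC has not collected it yet.
        if (_entries.TryGetValue(key, out var weak) && weak.TryGetTarget(out var cached))
        {
            return cached;
        }

        // Never cached, or already collected: recreate and cache weakly.
        TValue value = _factory(key);
        _entries[key] = new WeakReference<TValue>(value);
        return value;
    }
}
```

While some caller still holds a strong reference to a value, `Get` returns the same instance; once nothing else references it, the GC is free to reclaim it.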

Read more: http://www.guidanceshare.com/wiki/.NET_2.0_Performance_Guidelines_-_Garbage_Collection

7) Common Memory Problems

- Excessive RAM footprint

- App allocates objects too early or keeps them for too long, using more memory than needed

- Can affect other apps on the system

- Excessive temporary object allocation

- Garbage collection runs more frequently

- Executing threads freeze during garbage collection

- Memory leaks

- Overlooked root references keep objects alive (collections, arrays, session state, delegates/events etc)

- Incorrect or absent finalization can cause resource leaks
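Events are one of the easiest root references to overlook: a long-lived publisher's delegate list keeps every subscriber alive. The hypothetical `Publisher` and `Subscriber` classes below sketch the usual fix, which is to detach the handler when the subscriber is done (here in `Dispose`).

```csharp
using System;

// Hypothetical long-lived publisher, e.g. an application-wide service.
public class Publisher
{
    public event EventHandler Changed;

    // Number of handlers currently attached to the event.
    public int SubscriberCount => Changed == null ? 0 : Changed.GetInvocationList().Length;

    public void Raise() => Changed?.Invoke(this, EventArgs.Empty);
}

// Without the -= in Dispose, the publisher's delegate list would keep this
// object (and everything it references) reachable for as long as the
// publisher lives.
public sealed class Subscriber : IDisposable
{
    private readonly Publisher _publisher;

    public int Notifications { get; private set; }

    public Subscriber(Publisher publisher)
    {
        _publisher = publisher;
        _publisher.Changed += OnChanged;
    }

    private void OnChanged(object sender, EventArgs e) => Notifications++;

    public void Dispose() => _publisher.Changed -= OnChanged;
}
```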


Hope this helps

The out and ref Parameters in C#

In this article, I will explain how to use these parameters in your C# applications.

The out Parameter

An out parameter lets a method return a value through a variable passed as an argument. The caller does not need to initialize the variable, but the method must assign every out parameter before it returns; the assigned value is then visible in the caller's variable.

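```csharp
using System;

public class mathClass
{
    public static int TestOut(out int iVal1, out int iVal2)
    {
        // Every out parameter must be assigned before the method returns.
        iVal1 = 10;
        iVal2 = 20;
        return 0;
    }

    public static void Main()
    {
        int i, j; // variables need not be initialized before being passed as out
        Console.WriteLine(TestOut(out i, out j)); // prints 0
        Console.WriteLine(i); // prints 10
        Console.WriteLine(j); // prints 20
    }
}
```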

The ref parameter

The ref keyword on a method parameter makes the method refer to the same variable that was passed as the argument, so any change the method makes to the parameter is reflected in the caller's variable. Unlike out, a variable passed by ref must be initialized before the call.

You can use ref for more than one method parameter.

```csharp
namespace TestRefP
{
    using System;

    public class myClass
    {
        public static void RefTest(ref int iVal1)
        {
            iVal1 += 2; // modifies the caller's variable directly
        }

        public static void Main()
        {
            int i; // a ref argument must be initialized before the call
            i = 3;
            RefTest(ref i);
            Console.WriteLine(i); // prints 5
        }
    }
}
```

Tuesday, April 6, 2010

SQL Server Transaction Isolation Models

Normally, it's best to allow SQL Server to enforce isolation between transactions in its default manner; after all, isolation is one of the basic tenets of the ACID model. However, sometimes business requirements force database administrators to stray from the default behavior and adopt a less rigid approach to transaction isolation. To assist in such cases, SQL Server offers five different transaction isolation models. Before taking a detailed look at SQL Server's isolation models, we must first explore several of the database concurrency issues that they combat:

- Dirty Reads occur when one transaction reads data written by another, uncommitted, transaction. The danger with dirty reads is that the other transaction might never commit, leaving the original transaction with "dirty" data.

- Non-repeatable Reads occur when one transaction attempts to access the same data twice and a second transaction modifies the data between the first transaction's read attempts. This may cause the first transaction to read two different values for the same data, making the original read non-repeatable.

- Phantom Reads occur when one transaction accesses a range of data more than once and a second transaction inserts or deletes rows that fall within that range between the first transaction's read attempts. This can cause "phantom" rows to appear or disappear from the first transaction's perspective.

SQL Server's isolation models each attempt to conquer a subset of these problems, providing database administrators with a way to balance transaction isolation and business requirements. The five SQL Server isolation models are:

- The Read Committed Isolation Model is SQL Server’s default behavior. In this model, the database does not allow transactions to read data written to a table by an uncommitted transaction. This model protects against dirty reads, but provides no protection against phantom reads or non-repeatable reads.

- The Read Uncommitted Isolation Model offers essentially no isolation between transactions. Any transaction can read data written by an uncommitted transaction. This leaves the transactions vulnerable to dirty reads, phantom reads and non-repeatable reads.

- The Repeatable Read Isolation Model goes a step further than the Read Committed model by preventing transactions from writing data that was read by another transaction until the reading transaction completes. This isolation model protects against both dirty reads and non-repeatable reads.

- The Serializable Isolation Model uses range locks to prevent transactions from inserting or deleting rows in a range being read by another transaction. The Serializable model protects against all three concurrency problems.

- The Snapshot Isolation Model also protects against all three concurrency problems, but does so in a different manner. It provides each transaction with a "snapshot" of the data it requests. The transaction may then access that snapshot for all future references, eliminating the need to return to the source table for potentially dirty data.

If you need to change the isolation model for the current session, issue the command:

SET TRANSACTION ISOLATION LEVEL <level>

where <level> is replaced with any of the following keywords:

READ COMMITTED

READ UNCOMMITTED

REPEATABLE READ

SERIALIZABLE

SNAPSHOT
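From C#, the same choice is usually expressed with the real `System.Data.IsolationLevel` enum, which ADO.NET accepts, for example, in `SqlConnection.BeginTransaction`. The sketch below simply encodes the list above as a lookup table; the `Anomaly` flags enum, the `IsolationGuide` class and the "weakest sufficient level" helper are illustrative, not part of any library. Snapshot is left out of that helper because it uses row versioning rather than stronger locking, and in SQL Server it also requires the database option ALLOW_SNAPSHOT_ISOLATION to be ON.

```csharp
using System;
using System.Collections.Generic;
using System.Data;

[Flags]
public enum Anomaly
{
    None = 0,
    DirtyRead = 1,
    NonRepeatableRead = 2,
    PhantomRead = 4,
}

public static class IsolationGuide
{
    // Which concurrency problems each isolation level prevents,
    // following the descriptions in the text.
    private static readonly Dictionary<IsolationLevel, Anomaly> Prevents =
        new Dictionary<IsolationLevel, Anomaly>
        {
            [IsolationLevel.ReadUncommitted] = Anomaly.None,
            [IsolationLevel.ReadCommitted] = Anomaly.DirtyRead,
            [IsolationLevel.RepeatableRead] = Anomaly.DirtyRead | Anomaly.NonRepeatableRead,
            [IsolationLevel.Serializable] = Anomaly.DirtyRead | Anomaly.NonRepeatableRead | Anomaly.PhantomRead,
            [IsolationLevel.Snapshot] = Anomaly.DirtyRead | Anomaly.NonRepeatableRead | Anomaly.PhantomRead,
        };

    public static bool PreventsAnomaly(IsolationLevel level, Anomaly anomaly) =>
        Prevents.TryGetValue(level, out var mask) && (mask & anomaly) == anomaly;

    // Weakest locking-based level that still prevents every requested anomaly.
    public static IsolationLevel Weakest(Anomaly required)
    {
        IsolationLevel[] ordered =
        {
            IsolationLevel.ReadUncommitted, IsolationLevel.ReadCommitted,
            IsolationLevel.RepeatableRead, IsolationLevel.Serializable,
        };
        foreach (var level in ordered)
        {
            if (PreventsAnomaly(level, required)) return level;
        }
        throw new ArgumentOutOfRangeException(nameof(required));
    }
}
```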

UML 2.0 link

Diagram | Description | Learning Priority
Activity | Depicts high-level business … | High
Class | Shows a collection of static … | High
Communication | Shows instances of classes, … | Low
Component | Depicts the components that … | Medium
Composite Structure | Depicts the internal structure … | Low
Deployment | Shows the execution … | Medium
Interaction Overview | A variant of an activity … | Low
Object | Depicts objects and their … | Low
Package | Shows how model elements are … | Low
Sequence | Models the sequential logic, in … | High
State Machine | Describes the states an object … | Medium
Timing | Depicts the change in state or … | Low
Use Case | Shows use cases, actors, and … | Medium

http://www.agilemodeling.com/essays/umlDiagrams.htm

Monday, April 5, 2010

LINQ interview questions

LINQ is a set of extensions to the .NET Framework that encapsulates language-integrated query, set and other transformation operations. It extends VB and C# with language syntax for queries, and provides class libraries that allow developers to take advantage of these features.
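A small LINQ to Objects example shows the query syntax and the equivalent method syntax (`Where`, `OrderByDescending`, `Select` from `System.Linq`). The `Order` record and the sample data are hypothetical; because the query is checked by the compiler, a misspelled property or a type mismatch is a build error rather than a runtime error.

```csharp
using System.Collections.Generic;
using System.Linq;

// Hypothetical in-memory data; LINQ to Objects works on any IEnumerable<T>.
public record Order(int Id, string Customer, decimal Total);

public static class LinqBasics
{
    // Query syntax, type-checked at compile time.
    public static List<int> LargeOrderIds(IEnumerable<Order> orders, decimal minimum)
    {
        var query =
            from o in orders
            where o.Total >= minimum
            orderby o.Total descending
            select o.Id;
        return query.ToList();
    }

    // The same query written with extension methods and lambdas.
    public static List<int> LargeOrderIdsMethodSyntax(IEnumerable<Order> orders, decimal minimum) =>
        orders.Where(o => o.Total >= minimum)
              .OrderByDescending(o => o.Total)
              .Select(o => o.Id)
              .ToList();
}
```

With orders totalling 50, 150 and 300 and a minimum of 100, both methods return the IDs of the 300 and 150 orders, largest first.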

Difference between LINQ and Stored Procedures.

Stored procedures normally are faster as they have a predictable execution plan. Therefore, if a stored procedure is being executed for the second time, the database gets the cached execution plan to execute the stored procedure.

Unlike stored procedures, LINQ queries are type-safe: the compiler checks them.

LINQ provides an abstraction that allows the framework to add improvements such as multithreading. It’s much simpler and easier to add this support through LINQ than through stored procedures.

LINQ allows debugging with the .NET debugger, which is not possible with stored procedures.

LINQ supports multiple databases, whereas stored procedures need to be rewritten for each database.

Deploying LINQ based solution is much simpler than a set of stored procedures.

Pros and cons of LINQ (Language-Integrated Query)

Pros of LINQ:

Supports type safety

Supports abstraction and hence allows developers to extend features such as multithreading.

Easier to deploy

Simpler and easier to learn

Allows for debugging through the .NET debugger.

Support for multiple databases

Cons of LINQ:

LINQ needs to process the complete query, which might have a performance impact in case of complex queries

LINQ is generic, whereas stored procedures can take full advantage of database-specific features.

If there has been a change, the assembly needs to be recompiled and redeployed.

Disadvantages of LINQ over stored procedures:

LINQ needs to process the complete query, which might have a performance impact for complex queries, whereas a stored procedure call only needs to serialize the procedure name and argument data over the network.

LINQ is generic, whereas stored procedures can take full advantage of all the database's features.

If there has been a change, the assembly needs to be recompiled and redeployed whereas stored procedures are much simpler to update.

It’s much easier to restrict access to database tables using stored procedures and ACLs than through LINQ.

Can I use LINQ with databases other than SQL Server? Explain how.

LINQ supports Objects, XML, SQL, DataSets and Entities. You can use LINQ with other databases through LINQ to Objects or LINQ to DataSet: the objects and DataSets take care of the database-specific operations, and LINQ only needs to deal with those objects, not with the database directly.
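A minimal LINQ to DataSet sketch: the `DataTable` is built in memory here, whereas in a real application a provider-specific data adapter would fill it from whichever database you use. `AsEnumerable()` and `Field<T>()` are the real extension methods from System.Data.DataSetExtensions; the table, its columns and the helper names are hypothetical.

```csharp
using System.Collections.Generic;
using System.Data;
using System.Linq;

public static class LinqToDataSetDemo
{
    // Hypothetical data; a data adapter would normally fill this table.
    public static DataTable BuildCustomers()
    {
        var table = new DataTable("Customers");
        table.Columns.Add("Name", typeof(string));
        table.Columns.Add("Country", typeof(string));
        table.Rows.Add("Alice", "UK");
        table.Rows.Add("Bob", "US");
        table.Rows.Add("Chen", "UK");
        return table;
    }

    // The query runs against the in-memory rows; it never talks to the database.
    public static List<string> NamesIn(DataTable customers, string country) =>
        customers.AsEnumerable()
                 .Where(row => row.Field<string>("Country") == country)
                 .Select(row => row.Field<string>("Name"))
                 .OrderBy(name => name)
                 .ToList();
}
```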

Choosing Between MVC and MVP Patterns in ASP.NET

Introduction

Lately, ASP.NET developers have been paying increasing attention to the architecture of their applications. However, little effort is usually made to choose the right architectural solution. Developers often make a blind choice in favour of the Model-View-Controller pattern, and specifically the ASP.NET MVC framework, disregarding other possible solutions.

Nevertheless, alternative architectural solutions, such as Model-View-Presenter and corresponding frameworks (MVC#, for instance), do prove more useful than MVC in many situations. That is why ASP.NET developers and architects should be aware of the advantages of the MVP pattern over MVC, and vice versa, to choose the appropriate pattern for a specific application. This article provides guidelines for ASP.NET developers on choosing between MVC and MVP, covering the major differences between them.

Choice criteria

1. UI library used

Most ASP.NET user interface controls rely on the server-side event model to process user gestures. When a user clicks a menu item or a button on a web page, a server-side event is generated and then handled, by default, by a View class (the Web Form class). Thus, the View is the best place to receive user input for most ASP.NET UI libraries, both standard and third-party (such as DevExpress or Telerik Web controls).

However, as seen from the picture below, the Model-View-Controller pattern bypasses the standard ASP.NET request processing scheme, because the incoming gestures in MVC are received by the Controller. Moreover, the form of user requests in the ASP.NET MVC framework (which is based on a URI pattern) makes it difficult to adapt this framework to the conventional ASP.NET server-side event model. And, although there are a number of UI libraries compatible with the MVC pattern (for example, the Yahoo UI library), MVC is generally not well suited to most ASP.NET UI libraries.

The Model-View-Presenter pattern, on the contrary, expects the View to receive user gestures and then delegate processing to the Controller. Such a scheme fits most ASP.NET UI libraries comfortably.

To sum up, a developer should consider whether the desired UI library relies on the standard ASP.NET server-side event model. If it does (which is most likely), MVP would probably be a better choice.

2. Controller-View linking

According to the Model-View-Presenter pattern, a Controller has a constant link to the associated View object. This allows a Controller to access its View at any moment, either by getting or setting some View properties.

The Model-View-Controller pattern, on the other hand, provides limited capabilities for communication between the Controller and the View. MVC requires passing all data to the view in a single call, such as Render("OrdersView", ordersParam1, ordersParam2).

Thus, if an application needs extensive communication between Controller and View objects, MVP is preferable to MVC.
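The MVP arrangement described above can be sketched with plain C#: the View raises an event for the user gesture, and the Controller (often called the Presenter) keeps a permanent reference to the View through an interface so it can read and set View properties at any time. All names here (`IOrdersView`, `OrdersPresenter`, `FakeOrdersView`, the `findOrders` model call) are hypothetical and not taken from MVC# or any other framework.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical view contract; a Web Form or a WinForms form would implement it.
public interface IOrdersView
{
    string CustomerFilter { get; }       // read from the UI
    IList<string> Orders { set; }        // pushed back to the UI
    event EventHandler SearchRequested;  // raised from a server-side event handler
}

// The Controller/Presenter holds a constant link to its view.
public class OrdersPresenter
{
    private readonly IOrdersView _view;
    private readonly Func<string, IList<string>> _findOrders;  // hypothetical model call

    public OrdersPresenter(IOrdersView view, Func<string, IList<string>> findOrders)
    {
        _view = view;
        _findOrders = findOrders;
        // The view receives the gesture and delegates processing here.
        _view.SearchRequested += (sender, e) => OnSearch();
    }

    private void OnSearch()
    {
        // Read a view property, ask the model, then set another view property.
        _view.Orders = _findOrders(_view.CustomerFilter);
    }
}

// In-memory stand-in for a Web Form, e.g. for unit tests.
public class FakeOrdersView : IOrdersView
{
    public string CustomerFilter { get; set; } = "";
    public IList<string> Orders { get; set; } = new List<string>();
    public event EventHandler SearchRequested;

    public void ClickSearch() => SearchRequested?.Invoke(this, EventArgs.Empty);
}
```

Because the presenter depends only on `IOrdersView`, the same presenter could drive a Web Forms page, a Windows Forms form or the fake view above.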

3. Portability to other UI platforms

Many applications are initially designed to work on the Windows platform and later get ported to the web environment. Other applications are built to operate with multiple UI front-ends from the very beginning. Either way, portability to other presentation platforms is a question of great importance for many systems.

And again, MVP shows itself to be the better choice because it is compatible with various UI platforms. For example, the MVC# Framework currently supports Windows Forms and ASP.NET Web Forms, and support for Silverlight and WPF is on the agenda. The MVC pattern, by contrast, is good mainly for web applications and hardly fits other presentation platforms.

Therefore, if an application needs or later will need support for multiple GUI platforms, MVP would be the right solution.

4. Tasks support

The Task concept belongs neither to MVC nor to MVP paradigms. However, it is included in some MVC/MVP frameworks for its usefulness. Task is a set of views that a user traverses to fulfil some job. For example, an airline ticket booking task may consist of two views: one for choosing the flight, the other for entering personal information. Tasks often correspond to certain use cases of the system: There may be "Login to the system" and "Process order" use cases and tasks of the same names. Finally, a task may be associated with a state machine or a workflow: For example, a "Process order" task could be implemented with the "Process order" workflow in WWF.

A developer should consider whether tasks can help him build his application and accordingly choose a framework with support for tasks (such as MVC# or Ingenious MVC). Generally, the more complicated an application is, the more likely it could make use of the Task concept.
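To make the Task idea concrete, the hypothetical `BookingTask` below models the airline example as a tiny linear state machine over two views. It is not the task API of MVC# or Ingenious MVC; it only shows a task owning the sequence of views and collecting each view's result.

```csharp
using System;
using System.Collections.Generic;

// Hypothetical task: the ordered views a user walks through to book a ticket.
public class BookingTask
{
    private readonly string[] _views = { "ChooseFlight", "EnterPersonalInfo" };
    private int _current;

    // Data gathered by the views as the user progresses through the task.
    public Dictionary<string, string> Data { get; } = new Dictionary<string, string>();

    public bool IsComplete => _current >= _views.Length;

    // Name of the view to show next, or null once the task is finished.
    public string CurrentView => IsComplete ? null : _views[_current];

    // A view hands its result to the task, which then moves to the next view.
    public void Complete(string key, string value)
    {
        if (IsComplete) throw new InvalidOperationException("Task already finished.");
        Data[key] = value;
        _current++;
    }
}
```

A more complicated task would replace the fixed array with real transitions, or with a workflow, as the text suggests.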

Summary

You have considered the main differences between two popular architectural patterns: Model-View-Controller and Model-View-Presenter, and have learned the reasons for choosing one of them. The article shows that MVP could be a better choice for ASP.NET applications in many scenarios, and developers should certainly consider MVP frameworks as good alternatives to existing MVC solutions.

http://www.codeguru.com/csharp/.net/net_general/patterns/article.php/c15173

WS-Specifications

Most of the specifications are still in the draft phase and subject to change but some products already exist that support certain features. WS-* specifications are designed to be used with the SOAP versions 1.1 and 1.2.

WS-Addressing

The Web Service Addressing specification is essential for the other WS-Specifications. A reference to a Web Service endpoint can be expressed and passed using WS-Addressing. WS-ReliableMessaging and WS-Coordination use these descriptions of Web Service endpoints. Besides endpoint references, the specification provides message information headers.

WS-Policy

WS-Policy can express requirements, capabilities and assertions. For example, a policy can indicate that a Web Service only accepts requests containing a valid signature, or that a certain message size must not be exceeded. How a policy is obtained is out of the scope of this specification. WS-MetadataExchange and WS-PolicyAttachment specify how policies are made accessible through SOAP messages or associated with XML and WSDL documents.

WS-PolicyAttachment

The description of requirements or capabilities of a Web Service, expressed in WS-Policy, has to be associated with the Web Service in order to consider the policy before invoking the service. Web Services Policy Attachment specifies how policies can be associated with XML and WSDL or registered in UDDI registries.

WS-MetadataExchange

Metadata in WSDL documents, XML Schemas and WS-Policies is useful to invoke a Web Service successfully. How to retrieve this information about service endpoints is the subject of the Web Service Metadata Exchange specification.

WS-Resource Framework (WSRF)

The previous Web Services standards treated state like a stepchild or omitted it completely. A different approach to Web Services, the "representational state transfer" (REST) architecture, manages server-side data as resources. The WS-Resource Framework introduces state, in the form of resources, to SOAP-based Web Services. WSRF introduces a design pattern that describes how to access resources with Web Services. The WS-Resource Framework constitutes a refactoring of the Grid Forum's Open Grid Services Infrastructure (OGSI). WSRF is broken down into a family of composable specifications. Applications of the Resource Framework include Grid Computing and Systems Management.

WS-Notification

WS-Notification specifies how to use Publish-Subscribe messaging with Web Services. It exploits the WS-Resource Framework and Web Services.

WS-Inspection

Web Services that are offered by a website can be published using a WS-Inspection document. A WS-Inspection document provides references to service descriptions like UDDI entries or WSDL service descriptions. WS-Inspection does not compete with UDDI. On the contrary, it is a form of description at the point-of-offering which works in conjunction with UDDI.

WS-Security or Web Services Security (WSS)

Security is a requirement for adopting Web Services in critical applications. The integrity and confidentiality of messages must be guaranteed. Furthermore, the identity of the participating parties should be proven. The Web Services Security Language, or WS-Security for short, is a base for the implementation of a wide range of security solutions. In April 2004, the Organization for the Advancement of Structured Information Standards (OASIS) ratified WS-Security as a standard under the name OASIS Web Services Security (WSS).

Signing and encryption of SOAP messages, as well as the propagation of security tokens, are supported by WS-Security. WS-Security leverages the W3C's XML Signature and XML Encryption standards. Almost all WS-Specifications can be used in conjunction with WS-Security. For example, a wsp:Policy element inside a SOAP header should be signed to prevent tampering. WS-SecureConversation and WS-Trust are layered on top of WS-Security.

WS-SecureConversation

If more than two messages are exchanged, a security context might be more suitable than using WS-Security alone. Mechanisms for establishing and sharing security contexts, as well as deriving session keys from security contexts, are the subject of Web Services Secure Conversation. Deriving a new key requires a shared secret, a label and a seed.
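Key derivation from a shared secret, a label and a seed can be sketched with the P_SHA-1 pseudo-random function defined in TLS 1.0 (RFC 2246, section 5), which is the construction WS-SecureConversation's default derivation algorithm is based on. Treat this as an illustration only: the UTF-8 label encoding and the `DeriveKey` helper are assumptions of this sketch, and the specification itself defines the exact label, nonce and length handling.

```csharp
using System;
using System.Security.Cryptography;
using System.Text;

public static class DerivedKeys
{
    // P_SHA-1 from TLS 1.0:
    //   A(0) = seed, A(i) = HMAC(secret, A(i-1))
    //   output = HMAC(secret, A(1) + seed) + HMAC(secret, A(2) + seed) + ...
    // truncated to the requested length.
    public static byte[] PSha1(byte[] secret, byte[] seed, int length)
    {
        var output = new byte[length];
        using (var hmac = new HMACSHA1(secret))
        {
            byte[] a = seed;                         // A(0)
            int written = 0;
            while (written < length)
            {
                a = hmac.ComputeHash(a);             // A(i)
                byte[] block = hmac.ComputeHash(Concat(a, seed));
                int count = Math.Min(block.Length, length - written);
                Array.Copy(block, 0, output, written, count);
                written += count;
            }
        }
        return output;
    }

    // Hypothetical helper: shared secret plus label + seed, as described in the text.
    public static byte[] DeriveKey(byte[] sharedSecret, string label, byte[] seed, int length) =>
        PSha1(sharedSecret, Concat(Encoding.UTF8.GetBytes(label), seed), length);

    private static byte[] Concat(byte[] first, byte[] second)
    {
        var result = new byte[first.Length + second.Length];
        Buffer.BlockCopy(first, 0, result, 0, first.Length);
        Buffer.BlockCopy(second, 0, result, first.Length, second.Length);
        return result;
    }
}
```

Both parties holding the same secret, label and seed derive the same key, and changing the seed (for example, per message) yields a fresh key without exchanging the secret again.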

WS-Trust

WS-Trust is an extension of the WS-Security specification. It defines how Web Services and Security Token Services request, issue and exchange security tokens.

WS-ReliableMessaging

A sequence of messages can be reliably delivered using WS-ReliableMessaging. The receiver acknowledges received messages and asks for redelivery of lost messages.
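The receiver-side bookkeeping behind acknowledgements and redelivery requests can be sketched as follows. This models only the idea in the paragraph above; real WS-ReliableMessaging carries this information in SOAP headers (sequence acknowledgements with acknowledged ranges), and the `SequenceReceiver` class, as well as the assumption that message numbers start at 1, belongs to this sketch.

```csharp
using System.Collections.Generic;

// Simplified receiver for one message sequence.
public class SequenceReceiver
{
    private readonly SortedSet<long> _received = new SortedSet<long>();

    // Records a message number; returns false for a duplicate,
    // which the receiver can simply discard.
    public bool Receive(long messageNumber) => _received.Add(messageNumber);

    // Message numbers the receiver can acknowledge to the sender.
    public IReadOnlyCollection<long> Acknowledged => _received;

    // Gaps below the highest number seen: the sender should resend these.
    public List<long> Missing()
    {
        var missing = new List<long>();
        if (_received.Count == 0) return missing;

        for (long n = 1; n <= _received.Max; n++)
        {
            if (!_received.Contains(n)) missing.Add(n);
        }
        return missing;
    }
}
```

For example, after receiving messages 1, 2, 4 and 5, the receiver acknowledges those four and reports message 3 as missing.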

WS-Reliability

This specification is the counterpart to WS-ReliableMessaging. WS-Reliability was created by Sun Microsystems, Oracle, Sonic Software and others. Its goals (guaranteed delivery, duplicate message elimination and message ordering) are identical to those of WS-ReliableMessaging. WS-Reliability was submitted to the OASIS standards body as an open standard. The two standards are expected to converge into one, because only one specification for reliable messaging is needed.

WS-Coordination

WS-Coordination is an extensible framework for the establishment of coordination between Web Services and coordinators. Different kinds of coordination types can be defined. Each coordination type can have multiple coordination protocols. Contexts used for transactions or security can be created and associated with messages. A context contains a reference to a registration service. WS-Transaction leverages the WS-Coordination specification.

WS-Transaction

The WS-Transaction specification has been made obsolete by the WS-AtomicTransaction and WS-BusinessActivity specifications.

WS-AtomicTransaction

The Web Service Atomic Transaction specification describes transactions that have all-or-nothing semantics. Because the resources involved in the transaction are locked for its duration, the transaction should be short. If more than one resource takes part in a transaction, the two-phase commit protocol (2PC) comes into play to coordinate the participants.

The protocols of proprietary transaction monitors can be wrapped in order to operate in a Web Services environment.
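The core of two-phase commit fits in a few lines. In this sketch the `IParticipant` interface, the `TwoPhaseCommit` coordinator and the `FakeParticipant` test double are all hypothetical; a real coordinator (including one driven by WS-AtomicTransaction messages) also needs durable logging, timeouts and recovery, which are deliberately left out.

```csharp
using System.Collections.Generic;

// Hypothetical participant contract, e.g. a wrapped database or queue.
public interface IParticipant
{
    bool Prepare();   // phase 1: vote yes (true) or no (false)
    void Commit();    // phase 2 when everyone voted yes
    void Rollback();  // phase 2 when the transaction is aborted
}

public static class TwoPhaseCommit
{
    // Returns true if the transaction committed at every participant.
    public static bool Run(IReadOnlyList<IParticipant> participants)
    {
        // Phase 1: ask each participant to prepare, stopping at the first "no".
        var prepared = new List<IParticipant>();
        foreach (var participant in participants)
        {
            if (!participant.Prepare())
            {
                // A "no" voter aborts on its own; roll back those already prepared.
                foreach (var p in prepared) p.Rollback();
                return false;
            }
            prepared.Add(participant);
        }

        // Phase 2: everyone voted yes, so everyone commits.
        foreach (var participant in participants) participant.Commit();
        return true;
    }
}

// Test double that records what the coordinator asked it to do.
public class FakeParticipant : IParticipant
{
    private readonly bool _vote;

    public FakeParticipant(bool vote) { _vote = vote; }

    public string State { get; private set; } = "Active";

    public bool Prepare() { State = _vote ? "Prepared" : "Aborted"; return _vote; }
    public void Commit() => State = "Committed";
    public void Rollback() => State = "RolledBack";
}
```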

WS-BusinessActivity

Long-running transactions differ from short-lived transactions such as database transactions. In a Web Service environment, a transaction can span several days, so locking the resources is not recommended. Actions are executed immediately and changes to data are made permanent. In the case of an error, compensating actions are taken to undo the already committed modifications.

The Web Service Business Activity specification, covering long-running transactions, will replace Part II of the obsolete WS-Transaction specification. At the time of writing, the WS-BusinessActivity specification does not yet exist.
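Compensation can be sketched as running steps that take effect immediately and, if a later step fails, invoking the compensating actions of the completed steps in reverse order. The `Step` and `BusinessActivity` types are hypothetical and only model the idea; they do not implement the WS-BusinessActivity message protocol.

```csharp
using System;
using System.Collections.Generic;

// A business-activity step: Execute takes effect immediately,
// Compensate undoes it if the overall activity later fails.
public sealed class Step
{
    public Step(string name, Action execute, Action compensate)
    {
        Name = name;
        Execute = execute;
        Compensate = compensate;
    }

    public string Name { get; }
    public Action Execute { get; }
    public Action Compensate { get; }
}

public static class BusinessActivity
{
    // Runs the steps in order. If one throws, the already completed steps
    // are compensated in reverse order; no resources are held locked.
    public static bool Run(IEnumerable<Step> steps)
    {
        var completed = new Stack<Step>();
        try
        {
            foreach (var step in steps)
            {
                step.Execute();
                completed.Push(step);
            }
            return true;
        }
        catch (Exception)
        {
            while (completed.Count > 0)
            {
                completed.Pop().Compensate();
            }
            return false;
        }
    }
}
```

For a trip booking where the flight and hotel succeed but the payment fails, the hotel booking is cancelled first and then the flight booking.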

WS-Routing

The path of a SOAP message from the initial sender to the ultimate receiver via a set of optional intermediaries can be expressed in the SOAP header using the Web Services Routing Protocol. The reverse path can be expressed in this way as well. The specification enables several message exchange patterns like request/response, message acknowledgement and peer-to-peer conversation.

WS-Federation

Sophisticated Web Service scenarios can span several Web Services located in different security domains. It is inconvenient for a user or a Web Service application to prove and validate its identity to every service. Federation enables seamless access to Web Services in different domains. WS-Federation provides not only the transparent propagation of identities but also the propagation of arbitrary attributes.

WS-Eventing

An infrastructure for asynchronous message exchange is a building block for complex distributed applications. WS-Eventing enables the use of the Observer design pattern for Web Services. The Web Services Eventing protocol defines messages to subscribe to an event source, to end a subscription, and to send notifications about events.
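The three operations named above map directly onto the Observer pattern. The in-process `NotificationSource<T>` below is a hypothetical sketch: a subscription returns an identifier used later to end it, whereas WS-Eventing exchanges SOAP messages and refers to a subscription manager endpoint instead.

```csharp
using System;
using System.Collections.Generic;

// In-process model of subscribe, end subscription and notify.
public class NotificationSource<T>
{
    private readonly Dictionary<Guid, Action<T>> _subscriptions =
        new Dictionary<Guid, Action<T>>();

    public int SubscriberCount => _subscriptions.Count;

    // Registers a sink and returns the identifier needed to unsubscribe.
    public Guid Subscribe(Action<T> sink)
    {
        var id = Guid.NewGuid();
        _subscriptions[id] = sink;
        return id;
    }

    // Ends a subscription; returns false if the identifier is unknown.
    public bool Unsubscribe(Guid subscriptionId) => _subscriptions.Remove(subscriptionId);

    // Sends the event to every current subscriber. Iterating over a copy
    // lets a sink unsubscribe while notifications are being delivered.
    public void Notify(T evt)
    {
        foreach (var sink in new List<Action<T>>(_subscriptions.Values))
        {
            sink(evt);
        }
    }
}
```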

Summary

There is still a large gap between the rich features of CORBA and SOAP-based Web Services. The WS-* specifications will help fill the gap. However, most of the WS-* specifications are still in early stages. The essential WS-Security specification is the building block for most applications and is mature enough to use today. A very promising specification is WS-Addressing, because it is needed to pass an endpoint reference from one service to another.

The major companies in the Web Services business are behind the specifications, so there is a high probability that they will be widely adopted.

Difference between Silverlight and WPF

Silverlight is a Microsoft technology, competing with Adobe's Flash, and is meant for developing rich browser-based Internet applications.

WPF is a Microsoft technology meant for developing graphics-rich applications for the desktop platform. In addition, WPF applications can be hosted in web browsers, which brings rich graphics features to web applications. XAML Browser Applications (XBAPs) developed with WPF use XAML to define the user interface of browser applications. XAML stands for eXtensible Application Markup Language, a declarative programming model from Microsoft. XAML files are hosted as discrete files on the web server, but are downloaded to the browser and turned into a user interface by the .NET runtime on the client.

WPF runs on the .NET runtime, and developers can take advantage of the rich .NET Framework and WPF libraries to build really cool Windows applications. WPF supports 3-D graphics, complex animations, hardware acceleration, etc.